There’s no way around it: I’m a fan of procrastinating. But I try to procrastinate in ways that teach me something or that help me learn… which is why one of the things I like to do when procrastinating is figuring out algebraic relationships of things. Which brings us to this nice little result that taught me something a tad bit deeper than I expected. Let me begin.

A few months ago an interesting question was posed on Twitter inquiring about the relationship between the $\chi^2$ test of association for contingency tables and logistic regression. It was a very interesting thread, but within it there were a couple of tweets that captured my attention, which you can find here in case you’d like to know the context. I do reproduce them here just for the sake of keeping this blog entry self-contained:

Now, because I’m a huge stats nerd, I know that the $\chi^2$ test of association can be fit as a logistic regression model, and I also know that the $\phi$ coefficient (i.e., the Pearson correlation between two binary variables) follows the relationship $\phi^2 = \chi^2 / n$ when working with 2×2 tables. What I don’t know (and had actually never seen worked out) is why?
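Before chasing the proof, the relationship is easy to check numerically: the squared Pearson correlation between two binary variables should equal the Pearson chi-square statistic divided by the sample size. The sketch below is only an illustration. The function name `phi_and_chi2` and the example counts are made up, and the chi-square is computed without any continuity correction.

```python
import numpy as np

def phi_and_chi2(table):
    """Return (phi, chi2, n) for a 2x2 table of counts [[a, b], [c, d]]."""
    t = np.asarray(table, dtype=float)
    n = t.sum()
    # Expand the table into two binary vectors, one entry per observation.
    (a, b), (c, d) = t.astype(int)
    x = np.repeat([1, 1, 0, 0], [a, b, c, d])
    y = np.repeat([1, 0, 1, 0], [a, b, c, d])
    # Plain Pearson correlation of the two 0/1 vectors.
    phi = np.corrcoef(x, y)[0, 1]
    # Pearson chi-square: sum of (observed - expected)^2 / expected.
    expected = np.outer(t.sum(axis=1), t.sum(axis=0)) / n
    chi2 = ((t - expected) ** 2 / expected).sum()
    return phi, chi2, n

# Hypothetical counts, chosen only for illustration.
phi, chi2, n = phi_and_chi2([[30, 10], [15, 45]])
print(phi ** 2, chi2 / n)  # the two numbers should agree
```

Any table with non-empty margins can be plugged in; the two printed values should match up to floating-point error.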

So the first thing I do is go to the Wikipedia entry for the $\phi$ coefficient. And there is a reference there. One of the classics of psychometrics, Guilford’s (1936) Psychometric Methods. I have it. I go and check it and on p. 432 I see:

That’s it. No proof, no explanation. Just a throwaway statement that these things are equal without elaboration. Perhaps Lord & Novick’s (1968) Statistical Theories of Mental Test Scores can offer some guidance? I mean, that book has everything psychometrics in it. I go. I check. And I find this on p.336:

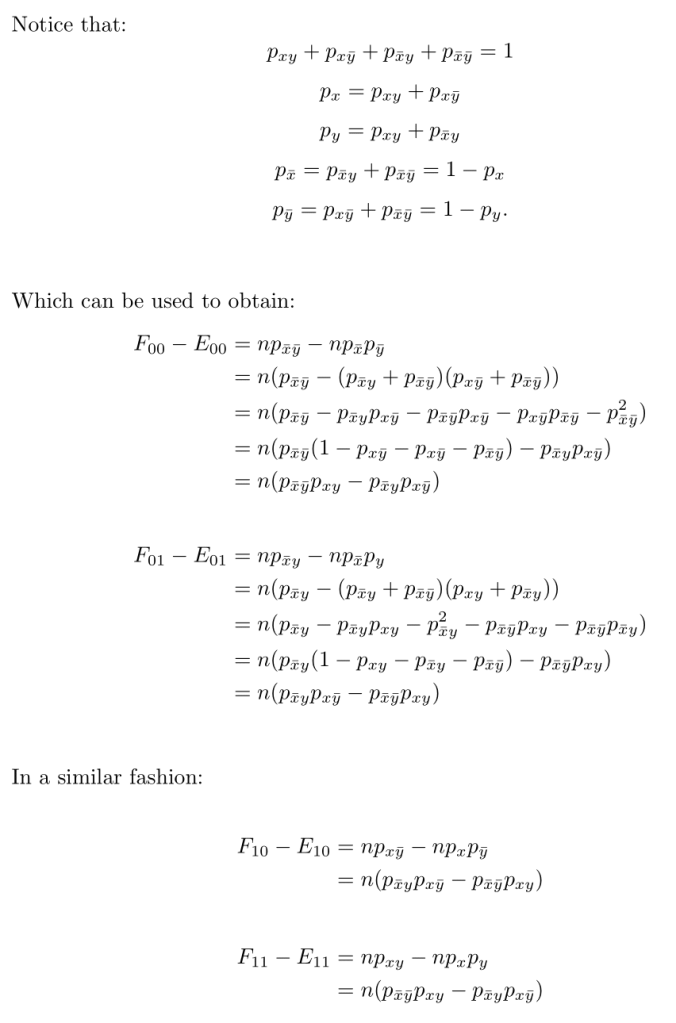

Again, no luck. But a clue has been offered: “It can be shown after tedious algebra (…)”. If you’ve read old math/stats books/articles/etc. (they don’t mince words when describing things), then you know the chances of finding a formal proof of this are slim to none. This is one of those things that amounts to what I call a “bookkeeping proof”: the kind of proof that relies mostly on being careful with how things are defined, which subscripts should be used where, etc., but where the technique is really just pushing around algebraic symbols. Which is perfect for me, because that’s what I like to do to procrastinate! So I decided that it would probably be a good idea to work this out and leave it available somewhere (like on this blog), to make sure people in the future don’t have to work through tedious algebra. So…here it is! And to put the cherry on top, it did help me realize something that I’d never seen before.

WordPress makes it difficult (at least for me) to include large chunks of LaTeX, so I’ll take the more humble approach of uploading the proof as pictures.
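For readers who cannot see the pictures, here is one way the bookkeeping can go. This is a sketch under assumed notation: the cell labels $a, b, c, d$, the 0/1 coding of $X$ and $Y$, and the use of the $1/n$ divisor for variances are choices made here and may not match the pictures. The divisor cancels in the correlation, so it does not affect the result.

```latex
% Assumed notation: 2x2 table of counts, X = 1 in row 1, Y = 1 in column 1.
%            Y = 1   Y = 0
%   X = 1      a       b
%   X = 0      c       d        n = a + b + c + d
\begin{align*}
\bar{x} &= \frac{a+b}{n}, \qquad \bar{y} = \frac{a+c}{n} \\
s_{xy} &= \frac{a}{n} - \frac{(a+b)(a+c)}{n^2} = \frac{ad - bc}{n^2} \\
s_x^2 &= \frac{(a+b)(c+d)}{n^2}, \qquad s_y^2 = \frac{(a+c)(b+d)}{n^2} \\
\phi &= \frac{s_{xy}}{s_x s_y} = \frac{ad - bc}{\sqrt{(a+b)(c+d)(a+c)(b+d)}} \\[1ex]
% Expected counts are E_{ij} = (row total i)(column total j) / n.
O_{ij} - E_{ij} &= \pm \frac{ad - bc}{n} \quad \text{for every cell} \\
\chi^2 &= \sum_{i,j} \frac{(O_{ij} - E_{ij})^2}{E_{ij}}
        = \frac{(ad-bc)^2}{n^2} \sum_{i,j} \frac{1}{E_{ij}} \\
\sum_{i,j} \frac{1}{E_{ij}} &= \frac{n^3}{(a+b)(c+d)(a+c)(b+d)} \\
\chi^2 &= \frac{n\,(ad-bc)^2}{(a+b)(c+d)(a+c)(b+d)} = n\,\phi^2
\end{align*}
```

The only step that takes some care is the sum of reciprocals: since $1/E_{ij} = n / (r_i c_j)$ for row totals $r_i$ and column totals $c_j$, the sum factors as $n\,(1/r_1 + 1/r_2)(1/c_1 + 1/c_2)$, and each bracket collapses because $r_1 + r_2 = c_1 + c_2 = n$.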

And that’s it! Now you may be wondering, what is the insight that this little exercise left me with? It’s actually very subtle, but also very interesting:

Generally speaking, we teach our Intro Stats students that a correlation of 0 does not imply independence, right? Easy example: take $X \sim N(0, 1)$, define $Y = X^2$, and try to find the Pearson correlation between those two. Although they are perfectly dependent (Y is a function of X, after all), the non-monotonic relationship induced by the squaring (i.e., a parabola) “breaks” the linearity that the Pearson correlation is capturing. There is an important exception to this, though: jointly normally-distributed random variables (i.e., a multivariate normal distribution). In this case, a correlation of 0 does imply independence among the components of the multivariate structure. HOWEVER, notice the proof above. The (non-parametric) measure of dependency captured by the $\chi^2$ statistic can also be expressed in terms of a correlation coefficient: the $\phi$ coefficient. Which means that for bivariate, Bernoulli-distributed random variables, a correlation of 0 necessarily implies independence between the binary variables.
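The squaring example is quick to simulate. The sketch below assumes a standard normal $X$ (any distribution symmetric around 0 with finite moments would do); the seed and sample size are arbitrary. It also correlates $Y$ with $|X|$ to show the dependence is there, just not in a linear form.

```python
import numpy as np

rng = np.random.default_rng(0)  # arbitrary seed, for reproducibility only
x = rng.standard_normal(200_000)  # assumed: X is standard normal
y = x ** 2                        # Y is a deterministic function of X

# Pearson correlation between X and Y: close to 0 despite full dependence.
r_xy = np.corrcoef(x, y)[0, 1]

# Correlating Y with |X| instead reveals the dependence.
r_absx_y = np.corrcoef(np.abs(x), y)[0, 1]

print(r_xy, r_absx_y)
```

The first value should hover near 0 and the second should be large, which is the whole point: zero correlation here says nothing about independence.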

So now the list grows. Joint distributions for which a Pearson correlation of 0 implies independence:

(*) The multivariate normal

(*) The bivariate Bernoulli (but only the bivariate case).
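One way to see why the bivariate Bernoulli case works: once the two marginal probabilities are fixed, a 2×2 joint distribution has only one free parameter left, and the covariance pins it down. The sketch below is an illustration under that framing. The helper `joint_from_margins` and the probabilities used are hypothetical.

```python
import numpy as np

def joint_from_margins(px, py, cov):
    """Joint pmf of two Bernoulli variables from P(X=1), P(Y=1), Cov(X, Y).

    Rows index X in (1, 0); columns index Y in (1, 0).
    """
    # Cov(X, Y) = P(X=1, Y=1) - P(X=1) P(Y=1), so p11 is fully determined.
    p11 = px * py + cov
    return np.array([[p11, px - p11],
                     [py - p11, 1 - px - py + p11]])

px, py = 0.3, 0.6  # hypothetical marginal probabilities
# What the joint pmf would be under independence: product of the margins.
independent = np.outer([px, 1 - px], [py, 1 - py])

zero_cov = joint_from_margins(px, py, cov=0.0)
some_cov = joint_from_margins(px, py, cov=0.05)

print(np.allclose(zero_cov, independent))  # zero covariance: every cell factorizes
print(np.allclose(some_cov, independent))  # nonzero covariance: it does not
```

With zero covariance there is simply no room left for any other kind of dependence, because all four cells are forced to equal the product of their margins.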